Note: This post is from 2010, and browsers/libraries have changed a lot since then. Please don’t use any of this information to make decisions about how to write code.

In my previous post, Investigating JavaScript Array Iteration Performance, I found that among a selection of different array iteration methods, jQuery’s each function was the slowest. It’s worth mentioning again that these investigations are pretty academic - array iteration and looping speed is unlikely to be the source of performance problems compared to actual program logic, DOM manipulation, string manipulation, etc. I just found it interesting to poke into how things work in different browsers. That said, with the recent release of jQuery 1.4 emphasizing performance so much, I wanted to see what, if anything, could be done to speed up each (which is used inside jQuery all over the place), and whether it would make much of a difference.

Again, the details are after the jump.

For reference, here’s the original implementation of jQuery.each from jQuery 1.3.2 (it hasn’t changed much for 1.4):

    function( object, callback, args ) {
        var name, i = 0, length = object.length;

        if ( args ) {
            ... omitted ...
        } else {
            if ( length === undefined ) {
                ... omitted ...
            } else
                for ( var value = object[0]; i < length && callback.call( value, i, value ) !== false; value = object[++i] ){}
        }

        return object;
    }

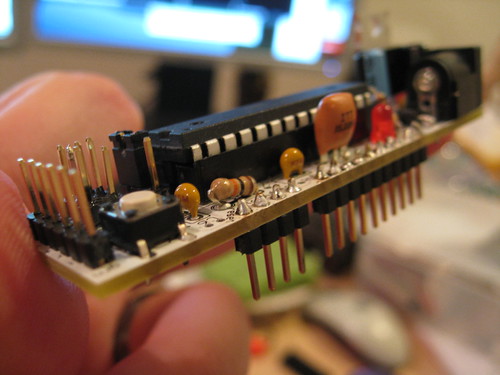

I cut out some pieces relating to iterating over Objects instead of Arrays, and some internal-only code, just for brevity. You can see that at its core, each just iterates over the array with a regular for loop and calls the provided callback for each element. It’s using the call function to invoke the callback so it can set this to the value of each element in the array in turn, and passes the index in the array and the value at that index as parameters to the callback as well.
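To make that calling convention concrete, here is a minimal sketch of just the array branch. The name `eachArray` and the sample data are assumptions for illustration, not jQuery's actual code; it only shows how `call` sets `this`, the order of the arguments, and the early exit on `false`.

```javascript
// Hypothetical stand-in for the array branch of jQuery.each (not the library's code).
function eachArray(array, callback) {
  for (var i = 0; i < array.length; i++) {
    // call() sets `this` to the current element and passes (index, value).
    if (callback.call(array[i], i, array[i]) === false) {
      break; // returning false from the callback stops the loop
    }
  }
  return array;
}

var seen = [];
eachArray([{ id: "a" }, { id: "b" }, { id: "c" }], function (i, value) {
  seen.push(i + ":" + this.id + ":" + (this === value));
  return value.id !== "b"; // stop once we reach "b"
});
console.log(seen); // ["0:a:true", "1:b:true"]
```

The elements here are objects on purpose: in non-strict code a primitive `this` gets wrapped in an object, so `this === value` would not hold for plain numbers or strings.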

I ended up trying four different modifications of jQuery’s each function. I also allowed myself to actually change the signature of each, which would likely break much existing code written on top of jQuery, but it gave me a lot more freedom to tweak things.

The first was to try using native Array.forEach (where available). I had to pass in my own callback to forEach that reversed the order of the index and value arguments to the function, since jQuery.each and Array.forEach put those arguments in opposite order. Of course, I had to fall back on the original for loop implementation for IE. This modification keeps the original signature and still sets this to each element, with one caveat: forEach ignores the callback’s return value, so returning false no longer stops the iteration early on that path.

    if (jQuery.isFunction(object.forEach) ) {
        object.forEach(function(value, i) {
            callback.call(value, i, value);
        });
    }
    else {
        for (var value = object[0]; i < length && callback.call(value, i, value) !== false; value = object[++i]) {}
    }
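A quick way to check how the forEach path behaves is to run it outside jQuery. This is a sketch under assumptions: `eachViaForEach` is a hypothetical helper that mirrors only the forEach branch of the variant above, without the fallback loop.

```javascript
// Hypothetical helper mirroring the forEach branch above (not jQuery's code).
function eachViaForEach(array, callback) {
  array.forEach(function (value, i) {
    // Swap forEach's (value, index) into jQuery's (index, value) order.
    callback.call(value, i, value);
  });
  return array;
}

var visited = [];
eachViaForEach([10, 20, 30], function (i, value) {
  visited.push(i + "=" + value);
  return false; // the plain for loop would stop here
});
console.log(visited); // ["0=10", "1=20", "2=30"]
```

All three elements are visited even though the callback returns `false`, because forEach discards the return value of the function it is given.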

Next, I tried skipping the callback that switches the order of arguments and just passing the user’s callback directly to forEach. I had to modify the fallback to match this. Notice in both cases we no longer set this to the current element in the iteration - Array.forEach doesn’t support that directly. We’re solidly in non-backwards-compatible change territory here.

    if (jQuery.isFunction(object.forEach) ) {
        object.forEach(callback);
    }
    else {
        for (var value = object[0]; i < length && callback.call(null, value, i) !== false; value = object[++i]) {}
    }
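From the caller's side, the visible change in this variant is the argument order. The sketch below assumes a hypothetical `eachNative` helper with the same shape as the code above; it is not part of jQuery.

```javascript
// Hypothetical helper mirroring the native-signature variant (not jQuery's code).
function eachNative(array, callback) {
  if (typeof array.forEach === "function") {
    array.forEach(callback); // callback receives (value, index, array)
  } else {
    for (var i = 0; i < array.length; i++) {
      if (callback.call(null, array[i], i) === false) break;
    }
  }
  return array;
}

var total = 0;
eachNative([1, 2, 3], function (value, index) {
  total += value * index; // value comes first, index second
});
console.log(total); // 8, i.e. 1*0 + 2*1 + 3*2
```

A callback written for the stock `(index, value)` order would silently receive its arguments swapped here, which is why this counts as a breaking change.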

I noticed in testing this out that Firefox seems to really struggle with using call with a frequently-changing value for this (the first parameter) so I tried another variation that didn’t use native Array.forEach but just didn’t change this in the call (I let it be the whole array each time):

    for (var value = object[0]; i < length && callback.call(object, i, value) !== false; value = object[++i]) {}

After that, I wondered why use call at all (I might be missing something important here about how JavaScript function invocation works - please correct me!) So I tried a version that just called the callback directly.

    for (var value = object[0]; i < length && callback(i, value) !== false; value = object[++i]) {}
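On the question of what `call` adds: as far as I can tell the only difference for the callback is the value of `this`. The sketch below uses hypothetical names (`strictThis`, `element`) to compare a direct call with `call` inside a strict-mode function; in non-strict code a direct call would see the global object instead of `undefined`.

```javascript
// Hypothetical function used only to inspect `this`.
function strictThis() {
  "use strict"; // in strict mode, a direct call leaves `this` undefined
  return this;
}

var element = { name: "item" };

var direct = strictThis();               // no receiver supplied
var viaCall = strictThis.call(element);  // receiver supplied explicitly

console.log(direct === undefined); // true
console.log(viaCall === element);  // true
```

So dropping `call` is safe only for callbacks that never read `this`.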

With these four variations, I went and tested how long it took for them to iterate over a 500,000 element array in different browsers. In the previous tests I used 100,000 elements but the tests completed too fast to get meaningful results (which should tell you how fast this stuff is to begin with!). As in the previous post, the absolute numbers don’t really mean much - it’s the comparison between the different approaches that matters.
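The test harness itself is not shown in this post, so the following is only a guess at its general shape: build the array, run one iteration strategy over it, and record the elapsed wall-clock time. `timeIteration` and `plainLoop` are hypothetical names.

```javascript
// Hypothetical timing helper; the harness used for the numbers below is not shown.
function timeIteration(iterate, size) {
  var data = [];
  for (var i = 0; i < size; i++) data.push(i);

  var sum = 0; // do a little work so the loop body is not empty
  var start = Date.now();
  iterate(data, function (index, value) { sum += value; });
  return { ms: Date.now() - start, sum: sum };
}

// One candidate strategy: a plain for loop calling the callback directly.
function plainLoop(array, callback) {
  for (var i = 0; i < array.length; i++) callback(i, array[i]);
}

var result = timeIteration(plainLoop, 500000);
console.log(result.ms + "ms", result.sum);
```

A single run like this is noisy; repeating it several times and comparing strategies within the same browser gives more trustworthy ratios than any one absolute number.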

Time to iterate over an array of 500,000 integers

| Browser | jQuery.each | Array.forEach (same signature as jQuery) | Array.forEach (native signature) | Unvarying ‘this’ | No call |
| --- | --- | --- | --- | --- | --- |
| Firefox 3.5 | 1,358ms | 1,591ms | 371ms | 576ms | 469ms |
| Firefox 3.6rc2 | 546ms | 672ms | 201ms | 194ms | 109ms |
| Firefox 3.7a1pre | 524ms | 641ms | 173ms | 102ms | 301ms |
| Chrome 3 | 81ms | 94ms | 41ms | 38ms | 35ms |
| Safari 4 | 54ms | 102ms | 102ms | 69ms | 56ms |
| IE 8 | 789ms | 759ms | 693ms | 741ms | 476ms |
| Opera 10.10 | 451ms | 703ms | 286ms | 305ms | 228ms |

We find that Firefox 3.6 improves over Firefox 3.5, IE is slow no matter what (though faster than Firefox 3.5 for vanilla jQuery.each), and the WebKit browsers are both very fast. What’s more interesting is to look at each time as a percentage of the stock jQuery implementation:

Percentage of time taken to iterate over 500,000 integers compared to regular `jQuery.each`.

| Browser | Array.forEach (same signature as jQuery) | Array.forEach (native signature) | Unvarying ‘this’ | No call |
| --- | --- | --- | --- | --- |
| Firefox 3.5 | 117% | 27% | 42% | 35% |
| Firefox 3.6rc2 | 123% | 37% | 36% | 20% |
| Firefox 3.7a1pre | 122% | 33% | 20% | 57% |
| Chrome 3 | 116% | 51% | 47% | 43% |
| Safari 4 | 189% | 188% | 128% | 104% |
| IE 8 | 96% | 88% | 94% | 60% |
| Opera 10.10 | 156% | 63% | 68% | 51% |
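Each percentage is just the variant's time divided by the stock jQuery.each time for the same browser, rounded to a whole number. As a worked check, here is the Firefox 3.5 row recomputed from the millisecond figures; the property names are my own labels for the four columns.

```javascript
// Firefox 3.5 timings from the first table, in milliseconds.
var baseline = 1358; // stock jQuery.each
var variants = {
  forEachWrapped: 1591, // Array.forEach (same signature as jQuery)
  forEachNative: 371,   // Array.forEach (native signature)
  unvaryingThis: 576,
  noCall: 469
};

var percentages = {};
for (var name in variants) {
  percentages[name] = Math.round((variants[name] / baseline) * 100);
}
console.log(percentages);
// { forEachWrapped: 117, forEachNative: 27, unvaryingThis: 42, noCall: 35 }
```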

A couple of things jump out at us - Array.forEach doesn’t buy us anything if we have to provide a callback to reverse the inputs. If we can use the native forEach signature, it gets much faster, but not by an order of magnitude. Not varying this helps a lot in Firefox and Chrome - I suspect some runtime optimizations kick in if this stays the same, but not if it’s changing. The overhead of call is significant - it tends to matter more than anything else here. The last thing to note is that, weirdly, Safari 4 is fastest with the stock jQuery.each - I wonder if they’ve optimized specifically for that pattern.

Armed with this knowledge, I customized a copy of jQuery 1.4 to stop referring to this in its uses of each, switched the for loop to call the callback directly instead of using call, and reverse-engineered the performance tests John Resig used for the jQuery 1.4 release notes. Using these tests, I compared my custom version to the released jQuery 1.4.

The result: optimizing array iteration speed made no difference. The real work being done by jQuery (DOM manipulation, etc) totally overshadows any array iteration overhead. Reducing that overhead even by 80% doesn’t matter at all. We learned a few things about how fast Array.forEach is and how setting this in call affects performance, but we haven’t found some magic way to make our code, or jQuery overall, any faster. Furthermore, the only improvement that would have preserved the signature of the original jQuery API was actually slower than the existing implementation! It’s not worth losing this in each for any of these speed gains.

There was one small improvement to jQuery, however - a very small boost to the compressibility of the library. Using explicit arguments to each instead of this to refer to the current element being iterated means that YUI Compressor or Google Closure Compiler can use one character for that item, instead of 4 for this (since this is a keyword). In practice, that saved about 197 bytes out of 69,838 - still not a huge win. But I like to avoid using this in my each anyway, just so I get to use semantically meaningful variable names, so it’s nice to see that I’m saving a byte or two along the way.

PS: Aside from the “jQueryness” of it, I wondered why each set this to the current element in the array anyway. I have one idea - if this is set to the current element in the array for each invocation of the callback, you can do cleaner OO-style JavaScript. For example, let’s say you have a Dialog object that has a close method. Of course the close method would just use this to refer to the object it’s a member of. But if you had an array of Dialogs and wanted to say “$.each(dialogs, Dialog.prototype.close)” and each didn’t set this to each Dialog in turn, everything would get confused. Of course, in jQuery 1.4 you can get around this using jQuery.proxy, which goes ahead and uses apply (a variant of call) anyway.
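Here is a small sketch of that Dialog scenario. Both `Dialog` and `eachWithThis` are hypothetical, written only to illustrate the idea; `eachWithThis` stands in for an each that binds `this` the way jQuery's does.

```javascript
// Hypothetical Dialog type, for illustration only.
function Dialog(title) {
  this.title = title;
  this.open = true;
}
Dialog.prototype.close = function () {
  this.open = false; // relies on `this` being the Dialog instance
};

// Minimal stand-in for an each that sets `this` to the current element.
function eachWithThis(array, callback) {
  for (var i = 0; i < array.length; i++) {
    if (callback.call(array[i], i, array[i]) === false) break;
  }
  return array;
}

var dialogs = [new Dialog("Save"), new Dialog("Open")];
eachWithThis(dialogs, Dialog.prototype.close);
console.log(dialogs.map(function (d) { return d.open; })); // [false, false]
```

If the loop called `callback(i, array[i])` directly instead, `close` would run without a Dialog as `this` and neither dialog would be closed.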