Note: This post is from 2009, and browsers/libraries have changed a lot since then. Please don’t use any of this information to make decisions about how to write code.

The other day I was working on some JavaScript code that needed to iterate over huge arrays. I was using jQuery’s $.each function just because it was simple, but I had heard from a bunch of articles on the web that $.each was much slower than a normal for loop. That certainly made sense, and switching to a normal for loop sped up my code quite a bit in the sections that dealt with large arrays.

I’d also recently seen an article on Ajaxian about a new library, Underscore.js, that claimed to include, among other nice Ruby-style functional building blocks, an each function that was powered by the native Array.forEach (added in JavaScript 1.6) when it was available, with a fallback for IE. I wondered how much faster that was than jQuery’s $.each, and that got me thinking about all the different ways to iterate over an array in JavaScript, so I decided to test them out and compare them in different browsers.


I went with six different approaches for my test. Each function would take a list of 100,000 integers and add them together. You can try the test yourself. The first was a normal for loop, with the loop invariant hoisted:

    var total = 0;
    var length = myArray.length; // read the length once, outside the loop
    for (var i = 0; i < length; i++) {
        total += myArray[i];
    }
    return total;

And then again without the invariant hoisted, just to see if it makes a difference:

    var total = 0;
    // myArray.length is re-read on every iteration
    for (var i = 0; i < myArray.length; i++) {
        total += myArray[i];
    }
    return total;

Next I tried a for in loop, which is really not a good idea for iterating over an array at all - it’s really for iterating over the properties of an object - but is included because it’s interesting and some people try to use it for iteration:

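    var total = 0;
    // i is each enumerable property name (the indices, as strings);
    // declared with var so it doesn't leak into the global scope
    for (var i in myArray) {
        total += myArray[i];
    }
    return total;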

Next I tried out the native Array.forEach, added in JavaScript 1.6, on its own. Note that IE doesn’t support this (surprised?):

    var total = 0;
    // the callback receives (value, index)
    myArray.forEach(function(n, i) {
        total += n;
    });
    return total;

After that I tested Underscore.js 0.5.1’s _.each:

    var total = 0;
    // like native forEach, _.each passes (value, index) to the iterator
    _.each(myArray, function(n, i) {
        total += n;
    });
    return total;

And then jQuery 1.3.2’s $.each, which differs from Underscore’s in that it doesn’t use the native forEach where available, and this is set to each element of the array as it is iterated:

    var total = 0;
    // unlike forEach and _.each, $.each passes (index, value)
    $.each(myArray, function(i, n) {
        total += n;
    });
    return total;
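
The argument-order difference between these functions is easy to trip over, so here is a minimal sketch of it. Note that `jqueryStyleEach` is a hypothetical stand-in written for this example, not jQuery's actual source; it only mimics the documented behavior of calling the callback with `(index, value)`, setting `this` to the current element, and stopping when the callback returns `false`.

```javascript
// Hypothetical stand-in for a jQuery-style each (not jQuery's real code):
// the callback receives (index, value) and `this` is the current element.
function jqueryStyleEach(array, callback) {
  for (var i = 0; i < array.length; i++) {
    // Returning false from the callback stops the loop, as in $.each.
    if (callback.call(array[i], i, array[i]) === false) {
      break;
    }
  }
  return array;
}

var myArray = [10, 20, 30];

// Native forEach: the callback receives (value, index).
var nativeTotal = 0;
myArray.forEach(function (n, i) {
  nativeTotal += n;
});

// jQuery-style: the callback receives (index, value).
var jqueryStyleTotal = 0;
jqueryStyleEach(myArray, function (i, n) {
  jqueryStyleTotal += n;
});

// Mixing up the order silently sums the indices instead of the values.
var mixedUpTotal = 0;
myArray.forEach(function (i, n) {
  mixedUpTotal += n; // n is actually the index here: 0 + 1 + 2
});

console.log(nativeTotal, jqueryStyleTotal, mixedUpTotal); // 60 60 3
```

The mixed-up version doesn't throw, which is what makes this kind of mistake hard to spot in a benchmark: the loop still runs, it just adds up the wrong numbers.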

I tested a bunch of browsers I had lying around - Firefox 3.5, Chrome 3, Safari 4, IE 8, Opera 10.10, and Firefox 3.7a1pre (the bleeding edge build, because I wanted to know if Firefox is getting faster). I tried testing on IE6 and IE7 in VMs but they freaked out and crashed. The tests iterated over a list of 100,000 integers, and I ran each three times and averaged the results. Note that this is not a particularly rigorous test - other stuff was running on my computer, some of the browsers were run in VMs, etc. I mostly wanted to be able to compare different approaches within a single browser, not compare browsers, though some differences were readily apparent after running the tests.
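
For anyone who wants to reproduce something similar, here is a rough sketch of the "run it three times and average" approach. This is not the harness I actually used; `makeArray`, `sumWithForLoop`, and `averageTime` are hypothetical names made up for this example, and filling the array with 0 through 99,999 is just an assumption about the input.

```javascript
// Build the input: `size` integers (0, 1, 2, ...), an assumed data set.
function makeArray(size) {
  var result = [];
  for (var i = 0; i < size; i++) {
    result.push(i);
  }
  return result;
}

// One of the approaches under test: a for loop with the length hoisted.
function sumWithForLoop(myArray) {
  var total = 0;
  var length = myArray.length;
  for (var i = 0; i < length; i++) {
    total += myArray[i];
  }
  return total;
}

// Run fn(input) `runs` times and return the mean elapsed time in ms.
function averageTime(fn, input, runs) {
  var elapsed = 0;
  for (var r = 0; r < runs; r++) {
    var start = new Date().getTime();
    fn(input);
    elapsed += new Date().getTime() - start;
  }
  return elapsed / runs;
}

var data = makeArray(100000);
var averageMs = averageTime(sumWithForLoop, data, 3);
console.log("for loop: " + averageMs + "ms on average");
```

Millisecond timestamps are coarse, so very fast loops will often report 0ms or 1ms; that is one more reason to treat numbers like these as rough comparisons rather than precise measurements.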

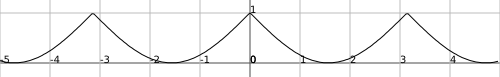

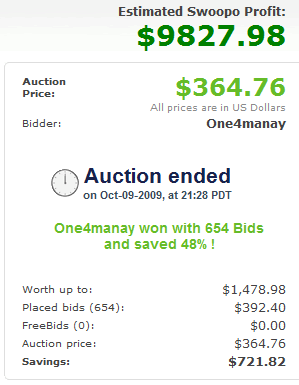

Time to iterate over an array of 100,000 integers

| Browser | for loop | for loop (unhoisted) | for in | Array.forEach | Underscore.js each | jQuery.each |
| --- | --- | --- | --- | --- | --- | --- |
| Firefox 3.5 | 2ms | 2ms | 78ms | 72ms | 69ms | 225ms |
| Firefox 3.7a1pre | 2ms | 3ms | 73ms | 29ms | 34ms | 108ms |
| Chrome 3 | 2ms | 2ms | 35ms | 6ms | 5ms | 14ms |
| Safari 4 | 1ms | 2ms | 162ms | 16ms | 15ms | 10ms |
| IE 8 | 17ms | 41ms | 265ms | n/a | 127ms | 133ms |
| Opera 10.10 | 15ms | 19ms | 152ms | 53ms | 57ms | 74ms |

I’ve highlighted some particularly interesting good and bad results from the table (in green and red, respectively). Let’s see what we can figure out from these tests. I’ll get the obvious comparisons between browsers out of the way - IE is really slow, and Chrome is really fast. Other than that, they each seem to have some mix of strengths and weaknesses.

First, the for loop is really fast. It’s hard to beat, and it’s clear that if you’ve got to loop over a ton of elements and speed is important to you, you should be using a for loop. It’s sad that it’s so much slower in IE and Opera, but it’s still faster than the alternatives. Opera’s result is somewhat surprising - while it’s not particularly fast anywhere, it’s not nearly as slow as IE on the other looping methods, but it’s still pretty slow on normal for loops. Notice that IE8 is the only browser where manually hoisting the loop invariant in the for loop makes a big difference - about 2.4x (17ms vs. 41ms). I’m guessing every other browser automatically caches the myArray.length result, leading to roughly the same performance either way, but IE doesn’t.

Next, it turns out that for in loops are not just incorrect, they’re slow - even in otherwise blazingly-fast Chrome. In every browser there’s a better choice, and for in doesn’t even get you much in terms of convenience (since it iterates over the indices of the array, not the values). Safari is particularly bad with them - they’re 10x slower than its next slowest benchmark. Just don’t use for in!
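
To make the "incorrect" part concrete, here is a small sketch of what for in actually walks over. The `label` property is a made-up example; the point is only that for in enumerates property names (as strings), including anything extra that has been attached to the array, in the order current engines use.

```javascript
var myArray = [10, 20, 30];

// An extra property on the array object (hypothetical, for illustration).
myArray.label = "scores";

var keys = [];
for (var key in myArray) {
  keys.push(key);
}
// keys now holds the index strings plus the extra property name:
// "0", "1", "2", "label"
console.log(keys.join(", "));

// Indices arrive as strings, so "arithmetic" on them concatenates.
var firstKeyType = typeof keys[0]; // "string"
var nextIndex = keys[0] + 1;       // "01", not 1

// Summing with for in picks up the extra property too.
var total = 0;
for (var k in myArray) {
  total += myArray[k];
}
console.log(total); // "60scores"
```

The same thing happens if a library adds enumerable methods to Array.prototype, which is a big part of why for in is a risky choice for arrays regardless of speed.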

The results for the native Array.forEach surprised me. I expected some overhead because of the closure and function invocation on the passed-in iterator function, but I didn’t expect it to be so much slower. Chrome and Safari seem to be pretty well-optimized, though it is still several times slower than the normal for loop, but Firefox and Opera really chug along - especially Firefox. Firefox 3.7a1pre seems to have optimized forEach a bit (probably more than is being shown, since 3.7a1pre was running in a VM while 3.5 was running on my normal OS).

Underscore.js each’s performance is pretty understandable: since it boils down to native forEach in most browsers, it performs pretty much the same. However, in IE it has to fall back to a normal for loop and invoke the iterator function itself for each element, and it slows way down. What’s most surprising about that is that having Underscore invoke a function for each element within a for loop is still about 7x slower in IE than just using a for loop (127ms vs. 17ms)! There must be a lot of overhead in invoking a function with call in IE - or maybe just in invoking functions in general.
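
The delegate-or-fall-back pattern described here can be sketched roughly as follows. This is not Underscore's source; `eachWithFallback` is a hypothetical name, and the array-like object below is just a way to exercise the fallback branch in an engine that does have a native forEach.

```javascript
// Hypothetical each in the spirit described above (not Underscore's code):
// delegate to native forEach when present, otherwise loop and invoke the
// iterator with call for every element.
function eachWithFallback(list, iterator, context) {
  if (typeof list.forEach === "function") {
    list.forEach(iterator, context);
  } else {
    for (var i = 0, length = list.length; i < length; i++) {
      iterator.call(context, list[i], i, list);
    }
  }
  return list;
}

// Native path: a real array has forEach in current engines.
var nativeTotal = 0;
eachWithFallback([1, 2, 3], function (n) {
  nativeTotal += n;
});

// Fallback path: an array-like object without forEach stands in for
// an engine such as IE8 that lacks the native method.
var arrayLike = { 0: 4, 1: 5, 2: 6, length: 3 };
var fallbackTotal = 0;
eachWithFallback(arrayLike, function (n) {
  fallbackTotal += n;
});

console.log(nativeTotal, fallbackTotal); // 6 15
```

In the fallback branch, every element costs one function invocation via call on top of the loop itself, which is the overhead that seems to hurt so much in IE.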

Lastly we have jQuery’s each, which (excluding for in loops) is the slowest method of iterating over an array in most of the browsers tested. The exception is Safari, which consistently does better with jQuery’s each than it does with Underscore’s or the native forEach. I’m really not sure why, though the difference is not huge. IE’s performance here is pretty much expected, since Underscore degrades to almost the same code as jQuery’s when a native forEach isn’t available. Firefox 3.5 is the real shocker - it’s drastically slower with jQuery’s each. It’s even slower than IE8, and by a wide margin! Firefox 3.7a1pre makes things better, but it’s still pretty embarrassing. I have some theories on why it’s so slow in Firefox and what could be done about it, but those will have to wait for another post.

It’d be interesting to try out some of the other major JavaScript libraries’ iteration functions and compare them with jQuery and Underscore’s speeds - I’m admittedly a jQuery snob, but it’d be interesting to see if other libraries are faster or not.

It’s worth noting that, as usual, this sort of performance information only matters if you’re actually seeing performance problems - don’t rewrite your app to use for loops or pull in Underscore.js if you’re only looping over tens, or hundreds, or even thousands of items. The convenience of jQuery’s functional-style each means it’s going to stay my go-to for most applications. But if you’re seeing performance problems iterating over large arrays, hopefully this will help you speed things up.